Back in 2012, when I started my first position as a backend engineer, our team’s main goal was to improve scalability and availability so that we could support more customers and larger workloads.

It cost my team leader and me many nights and weekends, not to mention the number of emails and calls coming from management repeatedly asking for status.

The months that followed were challenging and exhausting. We solved problem after problem, giving less attention to coding new features and more to stabilizing and reverse-engineering old components.

Still, it was a constant pain. You cannot keep marketing your product without making sure your system is up to the challenges that growth may bring.

Let us first ask ourselves: what qualities do you expect from any software application? Which aspects should a professional developer know?

Consistency

How consistent is my application? Ask yourself:

- If you give me the same input over and over again, will I provide the same result? That’s not obvious, especially if you fetch data from external resources that you have no control over.

- Is the performance more or less similar for the same load?

- Will all your users be exposed to the same information?

Do not assume that inconsistency necessarily points to a malfunctioning application. For specific features, you would rather return inconsistent results than a high-latency response.

For example, if you manage a large e-retail store that provides a recommendation list that should be updated after each purchase, how important is it to have that list refreshed as soon as possible? Would you postpone essential transactions so that the customer will have it up to date?

Availability

What’s the uptime I can promise my customers? Are there any single points of failure? It may be a complicated question when you have many different components, and each has its probability of shutting down. On that note, you also need to ask yourself if you have proper fail-over procedures and an alert system.

We should also ask ourselves how we can provide an SLA (service level agreement) when using external resources, especially when the SLA depends not on us but on another company’s product over which we have no control:

- We should never stop serving customers solely because one of our external providers collapsed. Should provider X crash or return a delayed response, how will it affect your application?

- Don’t ever depend on a single external resource. Make sure you have 2–3 more resources providing the same type of service, but don’t overdo it. The trade-off is merging more pieces of data, and trust me, it’s not simple.

- Use timeouts and consider wrapping the logical component that sends the requests with a circuit breaker. There is nothing worse than a slow response, even one that eventually returns a proper result.

- Pessimistic Development

Yeah, it sounds pretty dire, but we should always assume the worst case, and the failure doesn’t have to be our own fault, especially in the open-source world where we live. Check out some stability and capacity patterns that may help you confine errors to certain parts of your application instead of crashing the entire system. Release It! by Michael T. Nygard elaborates on these matters pretty well, and I highly recommend reading it; it is worth every penny.
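As a rough illustration of the timeout and circuit breaker advice above, here is a minimal Java sketch. The class name `SimpleCircuitBreaker`, its thresholds, and the fallback parameter are all hypothetical and chosen for this example only; in production you would more likely use an established library than hand-roll this.

```java
import java.time.Duration;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

// Hypothetical minimal circuit breaker; names and thresholds are illustrative.
final class SimpleCircuitBreaker {
    private final int failureThreshold; // consecutive failures before the circuit opens
    private final long openNanos;       // how long to fail fast before allowing a trial call
    private final long timeoutMillis;   // upper bound on a single external call
    private final ExecutorService executor = Executors.newCachedThreadPool(r -> {
        Thread t = new Thread(r, "external-call");
        t.setDaemon(true); // a stuck call must not keep the JVM alive
        return t;
    });

    private int consecutiveFailures;
    private boolean open;
    private long openedAtNanos;

    SimpleCircuitBreaker(int failureThreshold, Duration openFor, Duration callTimeout) {
        this.failureThreshold = failureThreshold;
        this.openNanos = openFor.toNanos();
        this.timeoutMillis = callTimeout.toMillis();
    }

    // Runs the call with a timeout; returns the fallback on failure or while the circuit is open.
    <T> T call(Callable<T> externalCall, T fallback) {
        if (isOpen()) {
            return fallback; // fail fast instead of waiting on an unhealthy provider
        }
        Future<T> future = executor.submit(externalCall);
        try {
            T result = future.get(timeoutMillis, TimeUnit.MILLISECONDS);
            recordSuccess();
            return result;
        } catch (Exception e) { // timeout, failure inside the call, or interruption
            if (e instanceof InterruptedException) {
                Thread.currentThread().interrupt();
            }
            future.cancel(true);
            recordFailure();
            return fallback;
        }
    }

    synchronized boolean isOpen() {
        if (open && System.nanoTime() - openedAtNanos >= openNanos) {
            open = false;                               // half-open: allow one trial call
            consecutiveFailures = failureThreshold - 1; // a single failure re-opens the circuit
        }
        return open;
    }

    private synchronized void recordSuccess() {
        consecutiveFailures = 0;
    }

    private synchronized void recordFailure() {
        consecutiveFailures++;
        if (consecutiveFailures >= failureThreshold) {
            open = true;
            openedAtNanos = System.nanoTime();
        }
    }
}
```

A caller would wrap each request to provider X in `call(...)` and pass a cached or default value as the fallback, so a slow provider costs at most the timeout and, once the circuit opens, nothing at all.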

Scalability

The ability of an application’s architecture to handle growth in workloads, and what it requires to manage that growth. Here you should be familiar with two terms:

- Horizontal Scaling (scale-out): adding more machines to the component’s node cluster. Ideally, it will produce linear scalability.

- Vertical Scaling (scale-up): boosting the hardware (CPU, SSD, etc.) of the machines already in the cluster.

Each of the above is flexible and depends heavily on the architecture of your application.

Now let’s get to the fun stuff.

Types of performance testing

There are three general approaches to testing an application:

Micro-Testing

Monitor and test a single logical unit

Efficient for bug detection since it focuses on small units.

It is not realistic to create one for each unit. You may write many JUnit tests to verify correctness and consistency. Still, when it comes to latency, they might be utterly pointless, given that your bottleneck can be somewhere else.

Complex systems are more than the sum of their parts.

Macro-Testing

Measure performance by using the application itself

Includes all aspects of putting all components together (sockets, connections, inconsistent data transfer, storage engines being accessed by several different elements, etc.)

Finds the bottleneck of your entire system, and as we all know, there is no point in tuning one part of the system when another limits its inbound work units.

Might not produce synchronization and thread contention problems under stress since the capacity, again, is limited by the bottleneck’s throughput.

Meso-Testing

Test sets of logical units that together are responsible for a more extensive task

Easier to monitor

As we mentioned before, stressing the components each on its own, without the effect of others, may reveal to us more thread-contention problems since the application’s bottleneck will not limit the component input.

CPU Usage

Low CPU under stress test

If CPU usage is low under stress, try increasing the number of work units (maybe your throughput improved since the last time you tested). If it doesn’t go any higher, your application is standing idle for long periods, waiting for synchronized locks to be released or for I/O responses, and it’s time to dig into I/O monitoring and profile the application.

High CPU yet low throughput

Have a look at the memory status. It might be due to high GC CPU usage (use a profiler to see it). A rule of thumb when it comes to GC: if GC is consistently hitting the 10% CPU mark, then something is not right, be sure of that.

If memory is not an issue, you should start a profiler and find CPU-intensive calls.
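For a quick first look at the GC rule of thumb above without attaching a profiler, the JVM’s standard management beans report accumulated collection time. The sketch below is only an approximation under stated assumptions: it measures elapsed collection time as a share of JVM uptime since startup, not true GC CPU usage, and the class name `GcOverhead` is hypothetical. A profiler remains the more accurate tool.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: approximates GC overhead from the JVM's management beans.
final class GcOverhead {

    // Total accumulated collection time across all collectors, in milliseconds.
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime(); // -1 when the collector does not report it
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    // Share of JVM uptime spent in collections since startup, as a percentage.
    static double gcPercentOfUptime() {
        long uptimeMillis = ManagementFactory.getRuntimeMXBean().getUptime();
        return uptimeMillis <= 0 ? 0.0 : 100.0 * totalGcMillis() / uptimeMillis;
    }
}
```

Logging `GcOverhead.gcPercentOfUptime()` periodically during a stress test gives a coarse signal; if it keeps climbing toward the 10% mark, that is the cue to open a real profiler.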

How to monitor CPU?

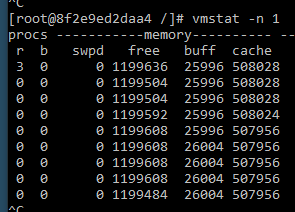

vmstat n: prints out the machine’s state every n seconds. I use it mostly to view the run queue’s size, which may indicate thread contention issues.

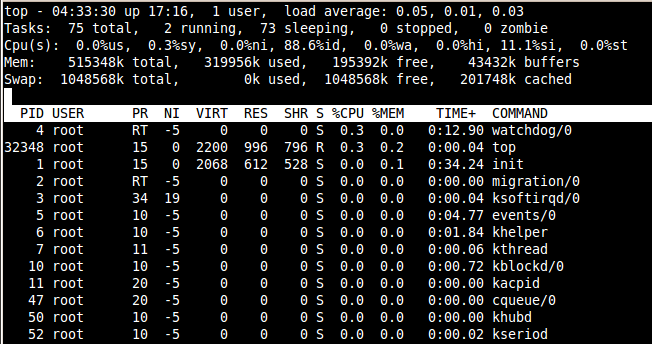

top: shows stats similar to vmstat, along with the resources allocated to every process. I use it mainly to pin down the process that is causing a performance regression; after doing so, I inspect that process separately.

Profilers: VisualVM, YourKit, Java Mission Control (if you use Java 8), and many others.

Run Queue - Examine contention

The number of threads waiting in the run queue should ideally equal the number of cores. If it’s too low, you can increase your workload; if it’s too high, you should consider reducing the size of your thread pools and begin profiling the application for CPU-intensive tasks.

Why do we want to keep it no higher than the number of cores?

Well, think about what happens behind the scenes in the operating system, specifically in the scheduler, which is responsible for handing out CPU time slices to the threads waiting on that queue. When a thread that has not finished its work is pulled off a core and goes back to the run queue, its current state is saved to memory and another thread’s state is loaded into the registers. That context switch can cause a significant regression, especially if it happens too often. As mentioned above, I use vmstat to monitor the run queue.

In the above example, I ran vmstat every second. In the ‘r’ column, you can see the number of threads waiting in the run queue. If this number exceeds the number of cores by a wide margin, you have way too many threads running.
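One way to keep the run queue close to the number of cores is to size CPU-bound thread pools from the core count instead of a hard-coded number. The following is a minimal sketch under that assumption; the class `CoreSizedPool` and its methods are hypothetical, and I/O-bound work usually justifies a larger pool than this.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical helper: a pool for CPU-bound work with one thread per core.
final class CoreSizedPool {

    static int cpuBoundPoolSize() {
        return Math.max(1, Runtime.getRuntime().availableProcessors());
    }

    static ExecutorService newCpuBoundPool() {
        return Executors.newFixedThreadPool(cpuBoundPoolSize());
    }

    // Demo workload: squares 1..tasks on the pool and sums the results.
    static long sumOfSquares(int tasks) {
        ExecutorService pool = newCpuBoundPool();
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (int i = 1; i <= tasks; i++) {
                final long n = i;
                Callable<Long> task = () -> n * n;
                futures.add(pool.submit(task)); // extra tasks queue up instead of spawning threads
            }
            long sum = 0;
            for (Future<Long> future : futures) {
                sum += future.get();
            }
            return sum;
        } catch (Exception e) {
            throw new RuntimeException("task failed", e);
        } finally {
            pool.shutdown();
        }
    }
}
```

However many tasks are submitted, at most one thread per core is runnable for this pool, so the waiting work sits in the executor’s queue rather than inflating the operating system’s run queue.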

Synchronization - do we really need it?

Given the risk of an incorrect implementation, the time it takes to develop thread-aware code, and the performance implications, first think carefully about whether synchronization and shared data are really required. Honestly, it doesn’t impress anyone if you synchronize your code for no good reason. I’ve seen all sorts of sophisticated synchronization mechanisms that were quite impressive for an interview exercise. However, they were not only redundant but also caused a deterioration of the entire system. Avoid synchronization and try looking for alternatives:

Turning Stateful Objects into Stateless Ones

For instance, reconsider your decision to wrap an object in a singleton.

Immutable Objects

That way, you can also prevent other developers from creating chaos. For instance, the number one mistake I’ve encountered with different kinds of in-memory cache implementations was caching mutable objects, which developers later modified.
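To make the cached-object case above concrete, here is a small sketch of an immutable cache entry. The class `Recommendations` and its fields are hypothetical, invented for this example; the point is only the pattern: final fields, a defensive copy on the way in, a read-only view on the way out.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical cache entry; the class and field names are illustrative.
final class Recommendations {
    private final String customerId;
    private final List<String> productIds;

    Recommendations(String customerId, List<String> productIds) {
        this.customerId = customerId;
        // Defensive copy: later changes to the caller's list cannot leak into the cached object.
        this.productIds = Collections.unmodifiableList(new ArrayList<>(productIds));
    }

    String customerId() {
        return customerId;
    }

    // Read-only view; calling add or remove on it throws UnsupportedOperationException.
    List<String> productIds() {
        return productIds;
    }

    // "Modifying" returns a new instance and leaves the cached one untouched.
    Recommendations withProduct(String productId) {
        List<String> copy = new ArrayList<>(productIds);
        copy.add(productId);
        return new Recommendations(customerId, copy);
    }
}
```

Because an instance can never change after construction, it can be shared between threads and handed out from a cache without any locking.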

Using CAS (compare-and-set) Objects

Based on the concept of optimistic locking, CAS objects are thread-safe objects built on volatile values (in other words, reads and writes go to main memory rather than to a cached copy). The advantage is that they do not cause any locking; yet given their implementation, which keeps the thread busy retrying, I do not recommend using them for highly contended code.
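In Java, the `java.util.concurrent.atomic` classes expose this mechanism through `compareAndSet`. The sketch below shows the typical optimistic retry loop; the class `CasCounter` and its `tryAdd` method are hypothetical names used only for illustration.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical bounded counter built on a CAS retry loop instead of a lock.
final class CasCounter {
    private final AtomicLong value = new AtomicLong();

    // Adds delta unless the result would exceed limit; returns true when the update was applied.
    boolean tryAdd(long delta, long limit) {
        while (true) {
            long current = value.get();
            long next = current + delta;
            if (next > limit) {
                return false;
            }
            // Succeeds only if no other thread changed the value since we read it.
            if (value.compareAndSet(current, next)) {
                return true;
            }
            // Lost the race: loop and retry with a fresh read. This spinning is
            // the cost that makes CAS a poor fit for highly contended code.
        }
    }

    long get() {
        return value.get();
    }
}
```

No thread ever blocks here, but under heavy contention many threads can spin through the loop repeatedly, which is the trade-off described above.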

Note: do not forget how crucial encapsulation is for data synchronization. Think how much simpler it is to maintain a single class containing the critical data members than to work your way around multiple components where the data is accessed.

Disk usage and I/O

- Page swaps: when a process’s virtual memory is larger than the currently available physical memory, the operating system will keep part of the pages on disk. In such a case, you will have to consider scaling up with more physical memory or figure out why your process consumes so much memory.

- A high number of I/O operations for a small amount of data transferred.

- Many tools can expose metrics regarding disk performance, for example, Zenoss, Prometheus, Grafana, vmstat, and most profilers.

Logs

Logs are crucial for analyzing an incident. If you have to reproduce the problem on staging to understand what happened or, even worse, start debugging, then you are missing some essential log messages.

Centralize crucial info: you should also centralize error-level logs on a single platform. For this purpose, you may use Logstash, Flume, or other technologies.

Purging: make sure that logs are purged regularly; no one is interested in last month’s logs, especially when they wake people up in the middle of the night because the disk ran out of space. If you use Log4j, I recommend setting the log appender to a rolling file appender, which caps the size of the log file; when the cap is exceeded, Log4j rolls the file over and discards the oldest backups once the configured number of backups is reached.
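The same size-capped rotation can be illustrated without any external dependency. Note that the sketch below deliberately swaps in the JDK’s own `java.util.logging.FileHandler` rather than Log4j, so it is an analogue of the rolling file appender, not Log4j configuration. The class `BoundedLogging`, the file name pattern, and the limits are assumptions made for this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Path;
import java.util.logging.FileHandler;
import java.util.logging.Logger;
import java.util.logging.SimpleFormatter;

// Hypothetical helper: a logger whose files rotate by size and are capped in number.
// Uses java.util.logging as a stand-in for Log4j's rolling file appender.
final class BoundedLogging {

    static Logger boundedLogger(String name, Path dir, int maxBytesPerFile, int maxFiles) {
        try {
            // %g is the generation number that distinguishes the rotated files.
            String pattern = dir.resolve(name + ".%g.log").toString();
            FileHandler handler = new FileHandler(pattern, maxBytesPerFile, maxFiles, true);
            handler.setFormatter(new SimpleFormatter());

            Logger logger = Logger.getLogger(name);
            logger.setUseParentHandlers(false); // keep the demo output out of the console
            logger.addHandler(handler);
            return logger;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With, say, a 10 MB limit and 5 files, the logs for this logger can never take more than roughly 50 MB of disk, regardless of how long the process runs.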

Database

Database engines, like many other technologies, have or should have monitoring tools that enable us to look closely at their activity. If those capabilities are not enough for our use cases (and that goes for all third-party technologies, not just databases), then we should reconsider our decision to use that particular database. Trust me, there is nothing worse than being kept in the dark about what is happening and having to peer into third-party code out of desperation when something goes wrong (sadly, I have been there quite a few times).

There are several capabilities that I find crucial.

Show Query Plan: in MySQL, MongoDB, and Couchbase (N1QL), you can run EXPLAIN on a query (in MongoDB, through the explain() method) and, in return, receive details regarding the query plan, such as which index was used, how long it took, how many records were scanned, etc. That is a vital tool that makes it easier to understand how you can optimize your queries.

Slow Query Log

Prints all slow queries to a log file. In MySQL it’s a separate log, in MongoDB it’s part of the profiler output (you need to set the profiler level to 1 or above), and Redis also has its slow log. It is crucial unless you like playing guessing games or using profilers to find out what happened; you can even have that file monitored regularly.

Live Monitoring

Tools to view current activity on the database. MongoDB has mongotop and mongostat, MySQL has innotop and mytop, and Couchbase has its monitoring console. To cut things short, all decent databases offer this in one way or another.

For relational databases, locking information: you can view the queries involved in the latest deadlock by using the command “SHOW ENGINE engine_name STATUS” (for example, SHOW ENGINE INNODB STATUS in MySQL).

To summarize

When you learn about a database technology, don’t forget to inspect its monitoring tools before deciding that its outstanding performance is the only reason to use it. You might be surprised how much time can be saved when you know how to monitor the database accurately.

Profilers

There are two methods we can use to profile an application:

Sampling: collecting metrics for a limited time, with a constant delay between one sample and the next. This method reduces the overhead the profiler has on the application and also enables us to stop it when we are done profiling. However, you might lose important information during the time the profiler does not sample the running environment.

Instrumentation: an agent attached to the application wraps methods with its own code, which collects the required data. Of course, here the overhead is much more significant, and the agent usually has to be set up front rather than attached at run time.
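To give a feel for how the sampling method works, here is a toy in-process sampler that looks at every thread’s stack at a fixed interval and counts which method is on top. It is a sketch only: the class `TinySampler` is hypothetical, and real profilers are far more careful about overhead and accuracy.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical toy sampler: counts the top stack frame of runnable threads.
final class TinySampler {

    // Takes `samples` snapshots, `intervalMillis` apart, and returns hit counts per method.
    static Map<String, Integer> sample(int samples, long intervalMillis) {
        Map<String, Integer> hits = new HashMap<>();
        for (int i = 0; i < samples; i++) {
            for (Map.Entry<Thread, StackTraceElement[]> entry : Thread.getAllStackTraces().entrySet()) {
                Thread thread = entry.getKey();
                StackTraceElement[] stack = entry.getValue();
                // Skip the sampler itself, threads that are not runnable, and empty stacks.
                if (thread == Thread.currentThread()
                        || thread.getState() != Thread.State.RUNNABLE
                        || stack.length == 0) {
                    continue;
                }
                String topFrame = stack[0].getClassName() + "." + stack[0].getMethodName();
                hits.merge(topFrame, 1, Integer::sum);
            }
            try {
                Thread.sleep(intervalMillis); // whatever happens during this gap goes unseen
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return hits;
    }
}
```

Methods with the most hits are where runnable threads spent most of the observed time. The sleep between snapshots is exactly the blind spot mentioned above: a short, expensive call that starts and ends between two samples never shows up.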

What’s important here is to understand the differences; there are many different profilers out there, each with its advantages and shortcomings. Writing about them is not within this post’s scope; perhaps we will do it later, with the main focus on those offered for free.